ReGraT

A System for Redirected Grabbing and Touching in Virtual Reality

Premise

A key missing part of Virtual Reality is grabbing with haptic feedback. In the real world we explore things by taking them into our hands: we rotate them and bring them closer to our face. We created ReGraT as a university project in a team of two, exploring the use of motion tracking and a custom 3D-printed controller to simulate haptic feedback. For the project we developed two major algorithms: a skeleton solver and a redirection algorithm. The skeleton solver creates a virtual representation of the hand from motion tracking data. To provide haptic feedback while grabbing objects of different sizes, we redirect the virtual fingers during the grabbing motion. The player always touches the same physical controller, while in the virtual world the hand touches objects of different sizes.

Let's see how these two algorithms work.

Skeleton Solver

A skeleton solver is an algorithm that usually takes a set of markers and derives from them a set of bones to animate. It is typically applied to the markers on a motion capture suit. For ReGraT, however, we developed our own skeleton solving algorithm for the bones of the hand only.

A typical grabbing motion uses the index finger and the thumb on opposite sides of the object. Imagine grabbing a glass of your favourite beverage. The other fingers of the hand follow the index finger, so solving the index finger and the thumb is enough for an accurate representation of a grab.
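The idea that the remaining fingers follow the index finger can be illustrated with a minimal Python sketch. It assumes a hypothetical pose format in which each finger is a list of joint flexion angles ordered from knuckle to fingertip; `HandPose`, `complete_hand_pose` and `FOLLOWER_FINGERS` are illustrative names, not part of ReGraT.

```python
from dataclasses import dataclass, field

# Hypothetical pose format: flexion angle (degrees) per joint, knuckle to fingertip.
@dataclass
class HandPose:
    index: list[float]
    thumb: list[float]
    others: dict[str, list[float]] = field(default_factory=dict)

# Fingers that are not tracked and simply mirror the index finger.
FOLLOWER_FINGERS = ("middle", "ring", "pinky")


def complete_hand_pose(index_angles, thumb_angles):
    """Build a full hand pose from the two solved fingers.

    Only the index finger and thumb come from tracking; the middle, ring and
    pinky fingers receive their own copy of the index finger's joint angles.
    """
    pose = HandPose(index=list(index_angles), thumb=list(thumb_angles))
    for name in FOLLOWER_FINGERS:
        pose.others[name] = list(index_angles)  # copy, so fingers can diverge later
    return pose
```

For example, `complete_hand_pose([10.0, 20.0, 30.0], [5.0, 15.0])` bends the middle, ring and pinky fingers exactly like the index finger.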

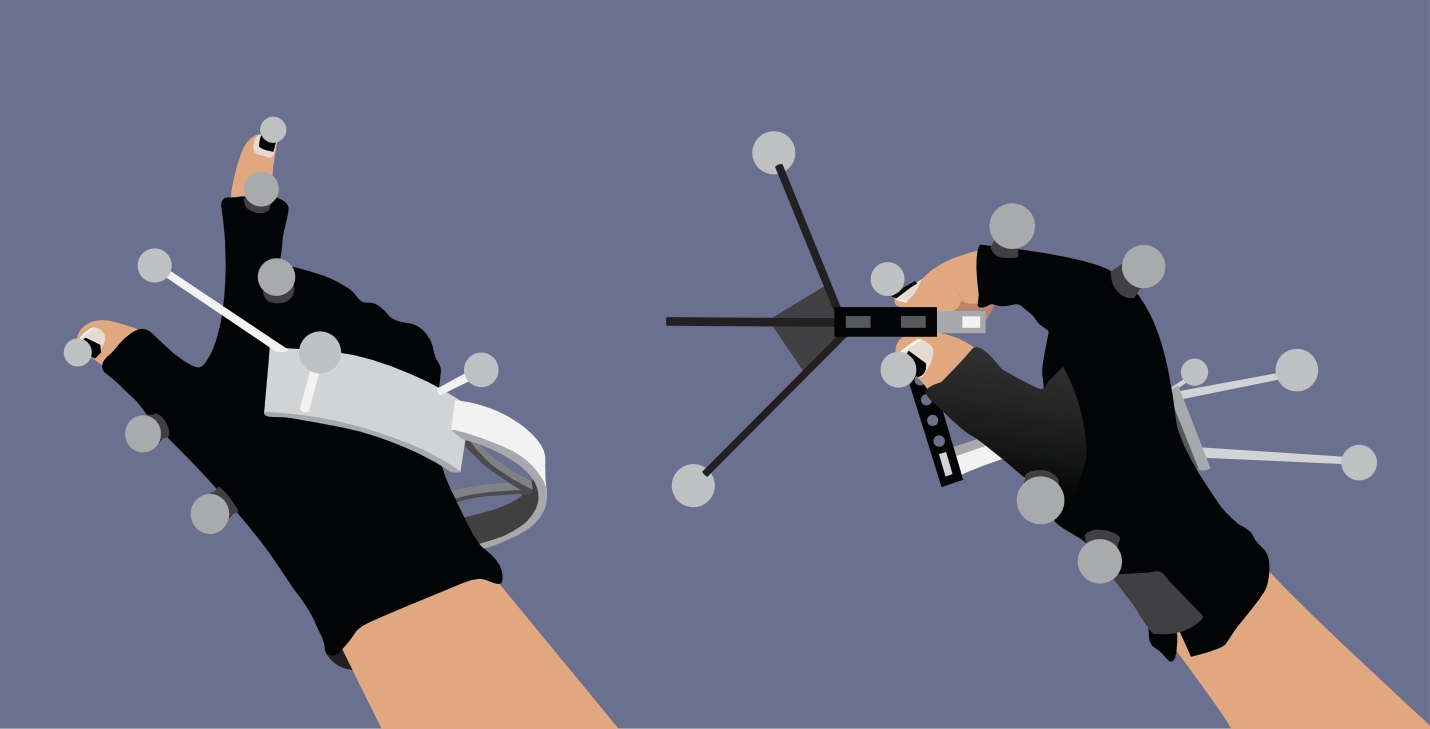

Tracking setup with retro-reflective markers

To solve the skeleton we use a calibration phase at the beginning. During this phase the user strikes the hand poses shown in the image above. In the pointing pose we can assign the markers to the index finger and the thumb by sorting them from left to right. We then sort each finger's markers by their distance from the center of the back of the hand, which gives us the marker for each finger segment. After calibration, no complex recalculation is needed each frame. Our motion tracking cameras run at 120 fps, so the markers move only slightly between frames; this lets us compare the previously solved markers with the still unlabeled markers of the current frame. Each old marker is replaced with the closest new marker, which yields a solved skeleton for every frame. For normal and even fast motion, this method is robust enough not to lose track of the skeleton structure.
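The calibration and per-frame tracking steps can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the ReGraT code: markers are plain `(x, y, z)` tuples, a right hand is seen so that in the pointing pose every thumb marker has a smaller x coordinate than every index-finger marker, and the number of thumb markers (`n_thumb`) is known. The function names and the `finger_segment` labels are hypothetical.

```python
import math


def calibrate(markers, back_of_hand, n_thumb):
    """Label the markers captured in the pointing pose.

    Assumption: the n_thumb left-most markers (smallest x) belong to the thumb,
    the rest to the index finger. Within each finger, markers are ordered from
    closest to the back of the hand (segment 0) to farthest (fingertip).
    """
    by_x = sorted(markers, key=lambda p: p[0])  # left to right
    thumb, index = by_x[:n_thumb], by_x[n_thumb:]

    labels = {}
    for finger, points in (("thumb", thumb), ("index", index)):
        ordered = sorted(points, key=lambda p: math.dist(p, back_of_hand))
        for segment, p in enumerate(ordered):
            labels[f"{finger}_{segment}"] = p
    return labels


def track(previous, current):
    """Relabel the current frame by nearest-neighbour matching.

    Each previously solved marker is replaced by the closest unlabeled marker
    of the current frame. If the frame contains no markers at all, the old
    positions are kept (an assumption of this sketch).
    """
    if not current:
        return dict(previous)
    return {
        label: min(current, key=lambda p: math.dist(p, old))
        for label, old in previous.items()
    }
```

Because each label independently picks its nearest marker, two labels could in principle claim the same marker; the simple rule relies on the small inter-frame motion at 120 fps.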

Redirection

To create real haptic feedback we use a 3D-printed controller that sits right at the point where the thumb and index finger meet. It is attached to the back of the hand, so it moves with the hand's gestures at all times. We cannot change the physical size of the controller to accommodate virtual objects of different sizes. Our solution was to manipulate the virtual grabbing motion so that the moments of virtual and real touch coincide. We calculate the distance from the fingertip to the physical controller and match this distance in the virtual world, no matter how big the virtual object is. For larger virtual objects this can look unnatural, because the virtual hand opens too wide. We fixed this by reducing the redirection distance as the virtual object gets bigger.
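One possible reading of this redirection, reduced to one dimension along the grasp axis, is sketched below in Python. It assumes the controller and the virtual object can be approximated by radii (in metres) around a common grasp centre. The threshold `full_redirection_radius` and the `falloff` factor are hypothetical parameters standing in for the size-dependent reduction, and clamping the fingertip to the virtual surface is likewise an assumption of this sketch, not a documented part of ReGraT.

```python
def redirection_offset(controller_radius, object_radius,
                       full_redirection_radius=0.05, falloff=0.5):
    """How far (m) the virtual fingertip is pushed outward relative to the real one.

    Up to the hypothetical threshold full_redirection_radius, the offset is the
    full size difference, so virtual and physical contact happen together.
    Above it, only a fraction `falloff` (hypothetical, 0..1) of the extra size
    is added, which keeps the virtual hand from opening too wide.
    """
    if object_radius <= full_redirection_radius:
        return object_radius - controller_radius
    full_part = full_redirection_radius - controller_radius
    return full_part + falloff * (object_radius - full_redirection_radius)


def virtual_fingertip(d_physical, controller_radius, object_radius, **kwargs):
    """Distance (m) of the virtual fingertip from the grasp centre.

    d_physical is the tracked distance of the real fingertip from the controller
    surface. The result is clamped to the virtual surface, so an attenuated
    offset never lets the finger sink into the virtual object.
    """
    offset = redirection_offset(controller_radius, object_radius, **kwargs)
    unclamped = controller_radius + offset + d_physical
    return max(unclamped, object_radius)
```

With `falloff = 1` the sketch reduces to pure distance matching, where virtual and physical contact always coincide; smaller values trade exact contact timing for a less widely opened hand on large objects.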

The Game

To test our method of recreating haptic feedback in virtual reality, we created a small VR game. The player is situated in a futuristic spaceship and is tasked with sorting energy containers of different shapes into the matching receivers.

View of the player during a game session.

Limitations and summary video

Because the OptiTrack motion capture system is built for whole-body motion, we lack precision, especially given the number of markers packed into a space as small as the hand. Additionally, we often occlude these markers with our own body parts. A possible solution to this problem could be to transfer the motion of visible markers to temporarily obstructed markers until the tracking cameras can see them again. Below you can see a summary video of the ReGraT system.
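The proposed occlusion fix could look roughly like the Python sketch below. It is a hypothetical extension of nearest-marker tracking, not something ReGraT implements: a labeled marker counts as occluded when no marker of the current frame lies within a hypothetical `max_jump` distance, and occluded markers are shifted by the mean displacement of the visible ones until they reappear.

```python
import math


def update_with_occlusion(previous, current, max_jump=0.01):
    """One tracking step that tolerates temporarily occluded markers.

    previous: dict label -> (x, y, z) from the last frame.
    current:  list of unlabeled (x, y, z) markers in this frame.
    max_jump: hypothetical distance (m) beyond which a match is rejected.
    """
    updated, visible_moves, occluded = {}, [], []
    for label, old in previous.items():
        nearest = min(current, key=lambda p: math.dist(p, old), default=None)
        if nearest is not None and math.dist(nearest, old) <= max_jump:
            updated[label] = nearest
            visible_moves.append(tuple(n - o for n, o in zip(nearest, old)))
        else:
            occluded.append(label)

    if visible_moves:
        # Mean displacement of all markers that were matched this frame.
        mean = tuple(sum(axis) / len(visible_moves) for axis in zip(*visible_moves))
    else:
        mean = (0.0, 0.0, 0.0)  # nothing visible: hold the last known pose
    for label in occluded:
        updated[label] = tuple(o + m for o, m in zip(previous[label], mean))
    return updated
```

Averaging displacements only transfers translation; a more faithful version might estimate a rigid rotation plus translation from the visible markers of the same finger.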